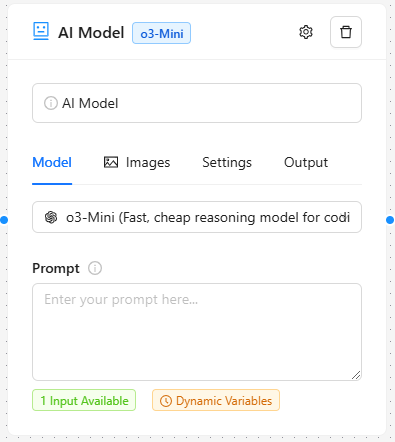

The AI Model Node lets you integrate large language models into your workflows. It supports models from multiple AI providers, so you can choose the one that fits each use case and budget.

Overview

The AI Model Node enables you to:

- Select from a wide range of AI models from different providers

- Configure model parameters for optimal output

- Process both text and image inputs

- Control the length and style of generated content

- Fine-tune the balance between creativity and consistency

Available Models

We continuously update our model selection as new models are released. Here’s our current lineup:

OpenAI (Direct Integration)

- o1: Advanced reasoning model optimized for complex problem-solving

- o1-Mini: Fast, cost-effective model for coding, mathematics, and scientific tasks

- o3-Mini: Efficient model balancing speed and reasoning capabilities

- o3-Mini-High: o3-Mini run with high reasoning effort, trading speed and cost for stronger results on harder problems

- GPT-4o: High-intelligence flagship model for sophisticated tasks

- GPT-4o-Mini: Cost-effective variant maintaining strong capabilities

Models via OpenRouter

Anthropic Models

- Claude 3.5 Sonnet: Balanced model for general tasks

- Claude 3.5 Haiku: Fast, efficient model for shorter outputs

- Claude 3 Opus: Advanced model for complex, lengthy tasks

- Claude 3 Haiku: Streamlined model for quick responses

Meta Llama Models

- Llama 3.3 70B Instruct: Large-scale model for comprehensive tasks

- Llama 3.2 Vision Instruct: Specialized model for image and text processing

- Other Llama variants: Various sizes and capabilities available

Google Models

- Gemini 2.0 Flash: Latest experimental model

- Gemini Flash variants: Different sizes and capabilities

- Gemma 2 9B IT: Free variant available for testing

Other Providers

- Perplexity: Sonar and Sonar Reasoning models

- Mistral: Including Pixtral 2 and Mistral Large 2

- xAI: Grok 2 and Grok 2 Vision

- DeepSeek: R1 and Chat variants

Model Parameters

Core Parameters

Temperature (0.0 – 2.0)

- Controls response creativity and randomness

- Lower values (toward 0.0): More focused, near-deterministic responses

- Higher values (toward 2.0): More creative, diverse outputs; values near the top of the range can become incoherent

- Default: 1.0

- Use case: Adjust based on whether you need consistent, factual responses (low) or creative, varied outputs (high)
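Language models commonly apply temperature by dividing each candidate token's raw score (logit) by the temperature before converting the scores to probabilities with a softmax. The sketch below shows that standard technique in plain Python. The logits are made-up values, and individual providers may implement temperature differently; treating 0.0 as "always pick the top token", as done here, is a common convention rather than a guarantee.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw token scores (logits) into probabilities, scaled by temperature.

    Temperature 0 is treated as greedy decoding: all probability goes to the top token.
    """
    if temperature < 0:
        raise ValueError("temperature must be non-negative")
    if temperature == 0:
        best = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == best else 0.0 for i in range(len(logits))]
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # hypothetical scores for three candidate tokens
for t in (0.0, 0.5, 1.0, 2.0):
    print(t, [round(p, 3) for p in softmax_with_temperature(logits, t)])
```

Printing the distributions shows the top token's share growing as temperature falls and the three probabilities evening out as it rises.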

Top P (0.0 – 1.0)

- Nucleus sampling parameter

- Restricts sampling to the smallest set of most likely tokens whose combined probability reaches P

- Lower values: More focused on highly probable tokens

- Higher values: Considers a broader range of possibilities

- Default: 1.0

- Use case: Fine-tune output predictability while maintaining coherence
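Nucleus (top-p) sampling can be sketched as a filter over the model's next-token probabilities: sort tokens from most to least likely, keep them until their cumulative probability reaches P, drop the rest, and renormalize. The function name and the probabilities below are hypothetical; providers apply this inside their own samplers.

```python
def top_p_filter(probs, top_p):
    """Nucleus (top-p) filtering over a list of token probabilities.

    Keeps the smallest set of most likely tokens whose cumulative probability
    reaches top_p, zeroes out the rest, and renormalizes what is kept.
    top_p = 0.0 keeps only the single most likely token.
    """
    if not 0.0 <= top_p <= 1.0:
        raise ValueError("top_p must be between 0.0 and 1.0")
    # Visit tokens from most to least likely.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = set(), 0.0
    for i in order:
        kept.add(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    total = sum(probs[i] for i in kept)
    return [probs[i] / total if i in kept else 0.0 for i in range(len(probs))]


probs = [0.5, 0.25, 0.125, 0.125]  # hypothetical next-token probabilities
print(top_p_filter(probs, 0.75))  # only the two most likely tokens survive
```

With P = 0.75 the two least likely tokens are removed entirely, which is why lower values make output more predictable.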

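Max Tokens (1 – Model Specific)

- Limits the length of the generated response, measured in tokens

- The upper limit varies by model. Figures such as 200,000 tokens for Claude 3 Opus or 128,000 for GPT-4o are context-window sizes, shared between prompt and response; the maximum output per response is much lower (for example, 4,096 tokens for Claude 3 Opus)

- Higher values allow longer outputs; providers bill for the tokens actually generated, so longer responses cost more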

- Use case: Control response length and processing costs
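Because the context window is shared between prompt and response, and many models also cap output length separately, the output budget a request can actually use is the smallest of three numbers. The sketch below computes that budget and a worst-case output cost. Both function names are hypothetical, and the per-million-token price in the example is a placeholder, not any provider's actual rate.

```python
def effective_max_tokens(prompt_tokens, requested_max, context_window, output_cap):
    """Hypothetical helper: the largest response length a request can actually use.

    The context window is shared by prompt and response, and many models also
    limit output length separately (output_cap).
    """
    room = context_window - prompt_tokens
    if room <= 0:
        raise ValueError("the prompt alone fills the context window")
    return min(requested_max, output_cap, room)


def worst_case_output_cost(max_tokens, usd_per_million_output_tokens):
    """Hypothetical helper: upper bound on output cost.

    Providers bill for tokens actually generated, which never exceeds max_tokens.
    """
    return max_tokens * usd_per_million_output_tokens / 1_000_000


budget = effective_max_tokens(
    prompt_tokens=195_000, requested_max=10_000, context_window=200_000, output_cap=4_096
)
print(budget)  # 4096: the output cap is the tightest limit here
print(worst_case_output_cost(budget, usd_per_million_output_tokens=10.0))  # placeholder price
```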

Advanced Parameters

Frequency Penalty (-2.0 to 2.0)

- Penalizes tokens in proportion to how often they have already appeared in the text so far

- Positive values: Discourage reusing the same tokens, reducing verbatim repetition

- Negative values: Encourage reuse of frequently used tokens

- Default: 0.0

- Use case: Control vocabulary diversity and repetition

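Presence Penalty (-2.0 to 2.0)

- Applies a flat, one-time penalty to any token that has already appeared in the text so far, regardless of how often

- Positive values: Encourage the model to move on to new words and topics

- Negative values: Encourage reuse of terms already mentioned

- Default: 0.0

- Use case: Balance between introducing new content and staying with what has already been mentioned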
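OpenAI's documentation has described both penalties with one formula: a token's logit (its raw score before sampling) is reduced by the number of times it has been sampled so far times the frequency penalty, and by the presence penalty once if that count is above zero. The sketch below implements that formula in plain Python with made-up tokens and scores; the function name is hypothetical, and providers reached through OpenRouter may apply the penalties differently.

```python
from collections import Counter


def apply_penalties(logits, generated_tokens, frequency_penalty=0.0, presence_penalty=0.0):
    """Adjust next-token logits for tokens that were already generated.

    logits: dict mapping token -> raw score
    generated_tokens: list of tokens produced so far
    """
    counts = Counter(generated_tokens)  # missing tokens count as 0
    adjusted = {}
    for token, score in logits.items():
        c = counts[token]
        adjusted[token] = (
            score
            - c * frequency_penalty  # grows with every repeat
            - (1.0 if c > 0 else 0.0) * presence_penalty  # flat, applied once
        )
    return adjusted


logits = {"cat": 2.0, "dog": 2.0, "bird": 2.0}  # hypothetical scores
history = ["cat", "cat", "cat", "dog"]
print(apply_penalties(logits, history, frequency_penalty=0.5))
print(apply_penalties(logits, history, presence_penalty=0.5))
```

With the same history, the frequency penalty lowers "cat" (three occurrences) three times as much as "dog", while the presence penalty lowers both by the same amount and leaves the unseen "bird" untouched.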

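Repetition Penalty (0.0 to 2.0)

- Penalizes tokens that already appear in the prompt or the text generated so far

- Values above 1.0: Discourage repetition, more strongly as the value increases

- Values below 1.0: Encourage repetition

- Default: 1.0 (no penalty)

- Use case: Prevent unwanted repetition; very high values can hurt coherence by discouraging words the text legitimately needs to reuse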
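Providers differ in how they implement the repetition penalty. A common formula, introduced with the CTRL model and implemented in Hugging Face Transformers as `RepetitionPenaltyLogitsProcessor`, divides a previously seen token's positive logit by the penalty and multiplies a negative logit by it. The sketch below reproduces that rule in plain Python; the function name, tokens, and scores are hypothetical, and the sketch requires a positive penalty because the formula divides by it.

```python
def apply_repetition_penalty(logits, seen_tokens, penalty):
    """CTRL-style repetition penalty over a dict of token -> logit.

    For every token already seen, positive logits are divided by `penalty` and
    negative logits multiplied by it. With penalty > 1.0 a seen token's nonzero
    logit always moves down; with penalty < 1.0 it moves up; 1.0 changes nothing.
    """
    if penalty <= 0:
        raise ValueError("penalty must be positive")
    adjusted = dict(logits)
    for token in set(seen_tokens):
        if token in adjusted:
            score = adjusted[token]
            adjusted[token] = score / penalty if score > 0 else score * penalty
    return adjusted


logits = {"a": 2.0, "b": -1.0, "c": 1.0}  # hypothetical scores
print(apply_repetition_penalty(logits, ["a", "b"], penalty=2.0))
```

Multiplying negative logits instead of dividing them is the design choice that keeps the penalty pointing in one direction: dividing a negative score by a value above 1.0 would raise it and make the repeated token more likely.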

Features

Text Input

- Rich text editor for prompts

- Support for dynamic input tags from previous nodes

- Variable substitution capabilities

- Multi-line input support

Image Input

- Multiple image URL inputs

- Support for dynamic image URLs from previous nodes

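- Intended for vision-capable models (for example, GPT-4o, Llama 3.2 Vision Instruct, or Grok 2 Vision)

- Useful for image analysis tasks such as describing, comparing, or answering questions about images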
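The node assembles the provider request for you. For reference, in the OpenAI-compatible chat format, which OpenRouter also accepts, a prompt with several image URLs is sent as one user message whose content mixes a text part with one `image_url` part per image. The sketch below builds such a message; the helper name and example URLs are placeholders, and this is not necessarily how the node builds its requests internally.

```python
import json


def build_vision_message(prompt, image_urls):
    """Build one user message in the OpenAI-compatible chat format:
    a text part followed by one image_url part per image."""
    content = [{"type": "text", "text": prompt}]
    for url in image_urls:
        content.append({"type": "image_url", "image_url": {"url": url}})
    return {"role": "user", "content": content}


message = build_vision_message(
    "Compare these two product photos.",
    ["https://example.com/a.jpg", "https://example.com/b.jpg"],  # placeholder URLs
)
print(json.dumps(message, indent=2))
```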

Output Handling

- Structured output format

- Easy integration with downstream nodes

- Consistent output format across models

Best Practices

- Model Selection

  - Choose models based on task complexity

  - Consider cost-effectiveness for simpler tasks

  - Use specialized models for specific needs (vision, code, etc.)

- Parameter Tuning

  - Start with default values

  - Adjust temperature first for desired creativity level

  - Fine-tune other parameters based on output quality

  - Test with small token limits before scaling up

- Input Formatting

  - Be specific in prompts

  - Use system messages for context

  - Include relevant examples when needed

  - Structure complex prompts clearly

- Cost Optimization

  - Use smaller models for simple tasks

  - Set appropriate token limits

  - Consider using free models for testing

  - Monitor usage patterns

Updates and Maintenance

We maintain an active update schedule for our AI Model Node:

- New models are added as they become available

- Existing models are updated to their latest versions

- Parameters are adjusted based on provider updates

- Documentation is regularly updated to reflect changes

Our team monitors new model releases and integrates new capabilities as they become available.