Overview #

The Web Scraper/Crawler node scrapes individual web pages or crawls entire websites to extract data. It integrates with the Firecrawl API to bring web scraping and crawling into your AI workflows.

Features of Web Scraper/Crawler #

- Two Operation Modes:

  - Scrape: Extract data from a single web page.

  - Crawl: Navigate through multiple pages of a website and extract data.

- Flexible Output Formats: Choose from various output formats, including Markdown, HTML, Raw HTML, Links, Screenshots, and more.

- Content Filtering: Specify which HTML tags to include or exclude in your scraping results.

- Extraction Capabilities: Use AI-powered extraction driven by either a natural language prompt or a schema of fields.

- Crawl Control: Set depth, limits, and navigation rules for crawling operations.

- Customizable Timing: Set wait times and timeouts for more precise control over the scraping process.

How to Use Web Scraper/Crawler Node #

1. Basic Setup #

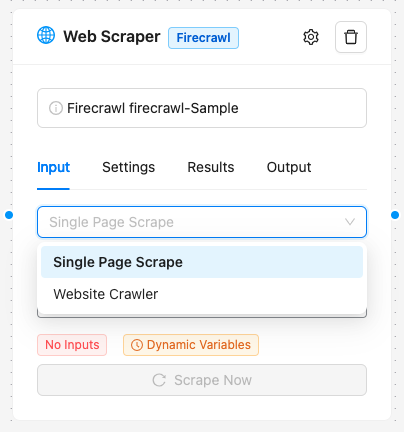

- Drag the Web Scraper/Crawler node onto your workflow canvas.

- Set a custom name for your node (optional).

- Choose the operation type: Scrape or Crawl.

- Enter the URL of the website you want to scrape or use as the starting point for crawling.

2. Scrape Operation #

If you select “Scrape”, you’ll have the following options:

- Format: Choose the output format (Markdown, HTML, Raw HTML, Links, Screenshot, Extract, Full Page Screenshot).

- Only Main Content: Toggle to scrape only the main content of the page, excluding headers, footers, etc.

- Include/Exclude Tags: Specify HTML tags to include or exclude in the scrape result.

- Wait For: Set a delay (in milliseconds) before the content is fetched, which gives dynamically loaded content time to appear.

- Timeout: Set a timeout (in milliseconds) for the request.
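
To make the scrape options above concrete, here is a minimal sketch of how they might translate into a Firecrawl-style request body. The function name `build_scrape_payload`, the label-to-value mapping, and the default values are assumptions made for illustration; the field names (`formats`, `onlyMainContent`, `includeTags`, `excludeTags`, `waitFor`, `timeout`) follow the Firecrawl v1 scrape endpoint as far as we know, so verify them against the Firecrawl API documentation before relying on them. The node's actual internal mapping is not documented here.

```python
from typing import Any, Dict, List, Optional

# Assumed mapping from the node's Format labels to Firecrawl-style values.
FORMAT_VALUES = {
    "Markdown": "markdown",
    "HTML": "html",
    "Raw HTML": "rawHtml",
    "Links": "links",
    "Screenshot": "screenshot",
    "Full Page Screenshot": "screenshot@fullPage",
    "Extract": "extract",
}


def build_scrape_payload(
    url: str,
    fmt: str = "Markdown",
    only_main_content: bool = True,
    include_tags: Optional[List[str]] = None,
    exclude_tags: Optional[List[str]] = None,
    wait_for_ms: int = 0,        # illustrative default, not the node's
    timeout_ms: int = 30000,     # illustrative default, not the node's
) -> Dict[str, Any]:
    """Assemble a scrape request body from node-style options (hypothetical helper)."""
    if fmt not in FORMAT_VALUES:
        raise ValueError(f"Unknown format: {fmt!r}")
    if wait_for_ms < 0 or timeout_ms <= 0:
        raise ValueError("wait_for_ms must be >= 0 and timeout_ms must be > 0")

    payload: Dict[str, Any] = {
        "url": url,
        "formats": [FORMAT_VALUES[fmt]],
        "onlyMainContent": only_main_content,
        "waitFor": wait_for_ms,
        "timeout": timeout_ms,
    }
    # Tag filters are only sent when the user actually specified some.
    if include_tags:
        payload["includeTags"] = list(include_tags)
    if exclude_tags:
        payload["excludeTags"] = list(exclude_tags)
    return payload


payload = build_scrape_payload(
    "https://example.com",
    fmt="Raw HTML",
    exclude_tags=["nav", "footer"],
    wait_for_ms=2000,
)
print(payload)
```

The sketch only builds the request body and sends nothing, so it is safe to run while experimenting with option combinations.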

Extraction Options #

If you choose “Extract” as the format, you have two sub-options:

- Prompt: Enter a natural language prompt to guide the AI in extracting specific information. For example: “Extract the company mission statement from this website.”

- Schema: Define a schema of fields to extract, specifying the name and data type for each field.
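
As an illustration of the schema-based option, the sketch below turns a list of field names and data types into a JSON Schema object. Treat this as an assumption-laden example: the helper `fields_to_json_schema`, the field names, and the set of allowed types are hypothetical, and the exact schema format the node sends to Firecrawl is not documented here.

```python
from typing import Any, Dict, List, Tuple

# Primitive type names defined by JSON Schema.
ALLOWED_TYPES = {"string", "number", "integer", "boolean", "array", "object"}


def fields_to_json_schema(fields: List[Tuple[str, str]]) -> Dict[str, Any]:
    """Build a flat JSON Schema object from (name, type) pairs (hypothetical helper)."""
    properties: Dict[str, Any] = {}
    for name, type_name in fields:
        if type_name not in ALLOWED_TYPES:
            raise ValueError(f"Unsupported type for {name!r}: {type_name!r}")
        properties[name] = {"type": type_name}
    return {
        "type": "object",
        "properties": properties,
        # Every listed field is marked required in this sketch.
        "required": [name for name, _ in fields],
    }


schema = fields_to_json_schema(
    [("company_mission", "string"), ("employee_count", "integer")]
)
print(schema)
```

Specific field names such as `company_mission` tend to guide extraction better than generic ones such as `text`, which is the same advice given for prompts.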

3. Crawl Operation #

If you select “Crawl”, you’ll have these options:

- Max Depth: Set the maximum depth to crawl relative to the entered URL.

- Ignore Sitemap: Choose whether to ignore the website’s sitemap during crawling.

- Limit: Set the maximum number of pages to crawl.

- Allow Backward Links: Enable to allow the crawler to navigate to previously linked pages.

- Allow External Links: Enable to allow the crawler to follow links to external websites.
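
To build intuition for how Max Depth, Limit, and Allow External Links interact, here is a toy breadth-first crawl over an in-memory link graph. It is a simplified model, not Firecrawl's actual algorithm: depth is counted in link hops here, whereas the real service may measure depth differently (for example by URL path), and the function `plan_crawl` and the sample `SITE` graph are invented for this example.

```python
from collections import deque
from typing import Dict, List
from urllib.parse import urlparse


def plan_crawl(
    start_url: str,
    links: Dict[str, List[str]],
    max_depth: int,
    limit: int,
    allow_external: bool = False,
) -> List[str]:
    """Return the pages a toy breadth-first crawler would visit, in order."""
    start_host = urlparse(start_url).netloc
    seen = {start_url}
    order: List[str] = []
    queue = deque([(start_url, 0)])

    while queue and len(order) < limit:      # Limit caps the total page count
        url, depth = queue.popleft()
        order.append(url)
        if depth >= max_depth:               # Max Depth stops link expansion
            continue
        for target in links.get(url, []):
            if target in seen:
                continue
            if not allow_external and urlparse(target).netloc != start_host:
                continue                     # skip links to other hosts
            seen.add(target)
            queue.append((target, depth + 1))
    return order


# Hypothetical site structure used only for this example.
SITE = {
    "https://example.com/": [
        "https://example.com/a",
        "https://example.com/b",
        "https://other.org/",
    ],
    "https://example.com/a": ["https://example.com/a/1"],
    "https://example.com/b": [],
}

print(plan_crawl("https://example.com/", SITE, max_depth=1, limit=10))
print(plan_crawl("https://example.com/", SITE, max_depth=2, limit=3))
```

In this model, whichever of Max Depth or Limit is reached first ends the crawl, so a generous depth with a small limit still visits only a few pages.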

Best Practices #

- Start Small: When crawling, start with a small limit and gradually increase it to avoid overloading the target server.

- Respect Robots.txt: Check the target website’s robots.txt file and make sure the pages you scrape or crawl are not disallowed.

- Use Delays: Implement reasonable delays between requests to avoid being blocked.

- Be Specific: When using the extract feature, be as specific as possible in your prompts or schema definitions.
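
The robots.txt and delay recommendations above can be checked before a workflow runs. The sketch below uses Python's standard `urllib.robotparser` on robots.txt text you have already fetched; the helper names `allowed_urls` and `polite_delay`, the sample rules, and the one-second fallback are assumptions for illustration, and nothing here reflects how the node itself handles robots.txt.

```python
from typing import Iterable, List
from urllib.robotparser import RobotFileParser


def _parser_for(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that was fetched separately."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(robots_txt: str, urls: Iterable[str], user_agent: str = "*") -> List[str]:
    """Keep only the URLs that robots.txt permits for this user agent."""
    parser = _parser_for(robots_txt)
    return [u for u in urls if parser.can_fetch(user_agent, u)]


def polite_delay(robots_txt: str, user_agent: str = "*", fallback: float = 1.0) -> float:
    """Use the site's Crawl-delay if it declares one, else a fallback in seconds."""
    delay = _parser_for(robots_txt).crawl_delay(user_agent)
    return float(delay) if delay is not None else fallback


# Hypothetical robots.txt content for this example.
ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

candidates = ["https://example.com/", "https://example.com/private/report"]
print(allowed_urls(ROBOTS, candidates))
print(polite_delay(ROBOTS))
```

A filter like this could sit in a node upstream of the scraper so that disallowed URLs never reach it.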

Troubleshooting Web Scraper/Crawler Node #

- If you’re not getting the expected results, try adjusting the “Wait For” time to allow dynamic content to load.

- If you’re being blocked by websites, try reducing your crawl speed or using the “Only Main Content” option.

- For extraction issues, experiment with different prompts or refine your schema.

Limitations #

- The Web Scraper/Crawler node is subject to the limitations of the Firecrawl API, including any rate limits or usage restrictions.

- Some websites may employ anti-scraping measures that could affect the node’s functionality.

Ethical Considerations #

- Always ensure you have the right to scrape or crawl a website. Some websites prohibit these activities in their terms of service.

- Be mindful of the load you’re placing on the target servers, especially when crawling.

- Respect the privacy and copyright of the data you collect with the Web Scraper/Crawler node.

Advanced Usage #

- Dynamic URLs: You can use the input handler to dynamically set the URL based on outputs from previous nodes in your workflow.

- Chaining: Use the output of a crawl operation as input for other nodes in your workflow for further processing or analysis.

- Custom Extraction: Combine the schema-based extraction with custom AI nodes for sophisticated data extraction and transformation pipelines.
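
As a sketch of the chaining idea, the snippet below flattens a crawl result into simple records that a downstream node could consume. The input shape (a `data` list whose items carry `markdown` and a `metadata` object with `sourceURL` and `title`) is an assumption modeled on Firecrawl-style crawl responses; the node's actual output format may differ, so inspect a real run before adapting this. The helper name `crawl_to_records` is hypothetical.

```python
from typing import Any, Dict, List


def crawl_to_records(crawl_output: Dict[str, Any]) -> List[Dict[str, str]]:
    """Turn an assumed crawl output into url/title/text records, skipping empty pages."""
    records: List[Dict[str, str]] = []
    for page in crawl_output.get("data", []):
        text = (page.get("markdown") or "").strip()
        if not text:
            continue  # nothing useful to pass downstream
        meta = page.get("metadata") or {}
        records.append(
            {
                "url": meta.get("sourceURL", ""),
                "title": meta.get("title", ""),
                "text": text,
            }
        )
    return records


# Hypothetical crawl output used only for this example.
sample = {
    "data": [
        {
            "markdown": "# Home\nWelcome.",
            "metadata": {"sourceURL": "https://example.com/", "title": "Home"},
        },
        {"markdown": "   ", "metadata": {"sourceURL": "https://example.com/empty"}},
    ]
}

for record in crawl_to_records(sample):
    print(record["url"], len(record["text"]))
```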

API Reference #

For more detailed information about the underlying API calls and response formats, refer to the Firecrawl API documentation.